
Performance testing is a form of software testing that focuses on how a system performs under a particular load. It is not about finding software bugs or defects. Different types of performance testing measure the system against benchmarks and standards. Performance testing gives developers the diagnostic information they need to eliminate bottlenecks.


First, it’s important to understand how software performs on users’ systems. There are different types of performance tests that can be applied during software testing. This is non-functional testing, which is designed to determine the readiness of a system. (Functional testing focuses on individual functions of software.)

Load testing measures system performance as the workload increases. That workload could mean concurrent users or transactions. The system is monitored to measure response time and system staying power as workload increases. That workload falls within the parameters of normal working conditions.
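As a rough illustration of the idea, the sketch below raises the number of concurrent users step by step and records response times at each step. It is only a minimal example: `handle_request` is a hypothetical stand-in that sleeps instead of calling a real system, and the user counts are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for a real request; sleeps to simulate work."""
    time.sleep(0.01)


def timed_call() -> float:
    """Return the wall-clock duration of one simulated request, in seconds."""
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def run_load_step(concurrent_users: int, requests_per_user: int) -> list[float]:
    """Issue requests from `concurrent_users` worker threads and collect durations."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(lambda _: timed_call(), range(total)))


if __name__ == "__main__":
    # Workload stays within (assumed) normal operating levels and grows per step.
    for users in (1, 5, 10):
        durations = run_load_step(users, requests_per_user=5)
        avg_ms = 1000 * sum(durations) / len(durations)
        print(f"{users:>2} users: {len(durations)} requests, avg {avg_ms:.1f} ms")
```

In a real load test, `handle_request` would be replaced by a call to the system under test, and a dedicated load testing tool would normally generate the traffic.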

Unlike load testing, stress testing (also known as fatigue testing) is meant to measure system performance outside of the parameters of normal working conditions. The software is given more users or transactions than it can handle. The goal of stress testing is to measure software stability: at what point does the software fail, and how does it recover from failure?
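One way to picture the search for a breaking point is a stepwise ramp that stops once the error rate passes a limit. The sketch below assumes a hypothetical `SimulatedService` with a fixed capacity; the class, the 5% error limit, and the step sizes are illustrative choices, not part of any real tool.

```python
from typing import Optional


class SimulatedService:
    """Hypothetical service that can process at most `capacity` requests per tick."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity

    def process(self, requests: int) -> int:
        """Return how many of `requests` failed during one tick."""
        return max(0, requests - self.capacity)


def find_breaking_point(
    service: SimulatedService,
    start: int,
    step: int,
    max_load: int,
    max_error_rate: float = 0.05,
) -> Optional[int]:
    """Increase load stepwise; return the first load whose error rate exceeds the limit.

    Returns None if the service stays under the limit up to `max_load`.
    """
    load = start
    while load <= max_load:
        failed = service.process(load)
        if failed / load > max_error_rate:
            return load
        load += step
    return None


if __name__ == "__main__":
    service = SimulatedService(capacity=100)
    print(find_breaking_point(service, start=50, step=25, max_load=500))
```

A fuller stress test would also drop the load back to normal after the failure and check how long the system takes to recover.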

Spike testing is a type of stress testing that evaluates software performance when workloads are substantially increased quickly and repeatedly. The workload is beyond normal expectations for short amounts of time.
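A spike test is largely defined by its load profile. The sketch below generates one possible profile: a baseline number of users with short, repeated bursts to a much higher peak. The function name and all of the numbers are assumptions made for illustration.

```python
def spike_profile(
    baseline: int, peak: int, period: int, spike_length: int, ticks: int
) -> list[int]:
    """Return the target user count for each tick.

    The last `spike_length` ticks of every `period` run at `peak`;
    all other ticks run at `baseline`.
    """
    return [
        peak if t % period >= period - spike_length else baseline
        for t in range(ticks)
    ]


if __name__ == "__main__":
    # Two spikes to 100 users over ten ticks, with a baseline of 10 users.
    print(spike_profile(baseline=10, peak=100, period=5, spike_length=1, ticks=10))
```

A load generator could then read this list tick by tick to decide how many virtual users to run.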

Endurance testing — also known as soak testing — is an evaluation of how software performs with a normal workload over an extended amount of time. The goal of endurance testing is to check for system problems such as memory leaks. (A memory leak occurs when a system fails to release discarded memory. The memory leak can impair system performance or cause it to fail.)
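To make the memory leak idea concrete, the sketch below uses Python's standard `tracemalloc` module to measure how much traced memory grows while a handler is called repeatedly. The `leaky_handler` function and its module-level cache are hypothetical and exist only to simulate a leak.

```python
import tracemalloc

_cache: list[bytes] = []  # deliberately never cleared, to simulate a leak


def leaky_handler() -> None:
    """Hypothetical request handler that keeps a reference to every payload."""
    _cache.append(bytes(1024))


def clean_handler() -> None:
    """Hypothetical handler that lets its payload be released immediately."""
    bytes(1024)


def memory_growth(handler, iterations: int) -> int:
    """Return the change in traced memory (bytes) after calling `handler` repeatedly."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    for _ in range(iterations):
        handler()
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


if __name__ == "__main__":
    print("leaky:", memory_growth(leaky_handler, 1000), "bytes")
    print("clean:", memory_growth(clean_handler, 1000), "bytes")
```

In an actual endurance test the same comparison would be made over hours of normal workload, and steady growth that never levels off would be the warning sign.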

Scalability testing is used to determine if software is effectively handling increasing workloads. This can be determined by gradually adding to the user load or data volume while monitoring system performance. Also, the workload may stay at the same level while resources such as CPUs and memory are changed.

Volume testing determines how efficiently software performs with large projected amounts of data. It is also known as flood testing because the test floods the system with data.

During performance testing of software, developers are looking for performance symptoms and issues. Speed issues — slow responses and long load times for example — often are observed and addressed. Other performance problems can be observed:

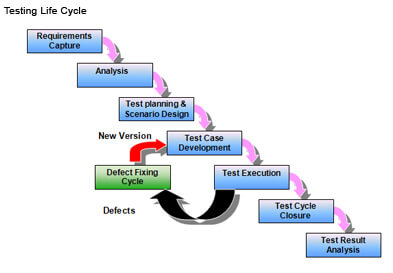

Image credit Gateway TestLabs

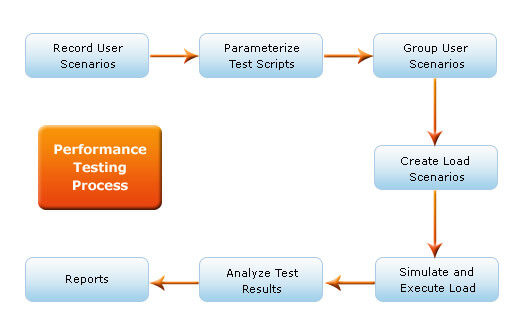

Also known as the test bed, a testing environment is where software, hardware, and networks are set up to execute performance tests. To use a testing environment for performance testing, developers can use these seven steps:

Identifying the hardware, software, network configurations and tools available allows the testing team to design the test and identify performance testing challenges early on. Performance testing environment options include:

In addition to identifying metrics such as response time, throughput and constraints, identify the success criteria for performance testing.
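Success criteria are easier to apply when they are written down as explicit thresholds. The sketch below shows one possible way to do that; the `SuccessCriteria` fields and the threshold values are hypothetical, and real values would come from the project's own requirements.

```python
from dataclasses import dataclass


@dataclass
class SuccessCriteria:
    """Hypothetical thresholds; real values come from the project's requirements."""

    max_avg_response_ms: float
    max_error_rate: float
    min_requests_per_second: float


def evaluate(
    criteria: SuccessCriteria,
    avg_response_ms: float,
    error_rate: float,
    requests_per_second: float,
) -> list[str]:
    """Return the list of failed criteria; an empty list means the run passed."""
    failures = []
    if avg_response_ms > criteria.max_avg_response_ms:
        failures.append("average response time too high")
    if error_rate > criteria.max_error_rate:
        failures.append("error rate too high")
    if requests_per_second < criteria.min_requests_per_second:
        failures.append("requests per second too low")
    return failures


if __name__ == "__main__":
    criteria = SuccessCriteria(
        max_avg_response_ms=500.0, max_error_rate=0.01, min_requests_per_second=100.0
    )
    print(evaluate(criteria, avg_response_ms=650.0, error_rate=0.002, requests_per_second=80.0))
```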

Identify performance test scenarios that take into account user variability, test data, and target metrics. This will create one or two models.

Prepare the elements of the test environment and instruments needed to monitor resources.

Develop the tests.

In addition to running the performance tests, monitor and capture the data generated.

Analyze the data and share the findings. Run the performance tests again using the same parameters and different parameters.

Metrics are needed to understand the quality and effectiveness of performance testing. Improvements cannot be made unless there are measurements. Two definitions that need to be explained:

There are many ways to measure speed, scalability, and stability but each round of performance testing cannot be expected to use all of them. Among the metrics used in performance testing, the following often are used:

Total time to send a request and get a response.

Also known as average latency, this tells developers how long it takes to receive the first byte after a request is sent.

The average amount of time it takes to deliver every request is a major indicator of quality from a user’s perspective.

This is the measurement of the longest amount of time it takes to fulfill a request. A peak response time that is significantly longer than average may indicate an anomaly that will create problems.

This calculation is a percentage of requests resulting in errors compared to all requests. These errors usually occur when the load exceeds capacity.

This is the most common measure of load: how many active users there are at any point. Also known as load size.

How many requests are handled.

A measurement of the total number of successful or unsuccessful requests.

Measured in kilobytes per second, throughput shows the amount of bandwidth used during the test.

How much time the CPU needs to process requests.

How much memory is needed to process the request.
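Several of the metrics above can be derived from the same raw samples. The sketch below assumes each request was recorded as a hypothetical `Sample` with a duration, a success flag, and a byte count, and computes average and peak response time, error rate, requests per second, and throughput from them.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One recorded request (hypothetical record layout)."""

    duration_ms: float
    ok: bool
    bytes_transferred: int


def summarize(samples: list[Sample], test_duration_s: float) -> dict[str, float]:
    """Compute common performance metrics from recorded request samples."""
    total = len(samples)
    durations = [s.duration_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    total_bytes = sum(s.bytes_transferred for s in samples)
    return {
        "average_response_ms": sum(durations) / total,
        "peak_response_ms": max(durations),
        "error_rate_percent": 100 * errors / total,
        "requests_per_second": total / test_duration_s,
        "throughput_kb_per_s": total_bytes / 1024 / test_duration_s,
    }


if __name__ == "__main__":
    samples = [
        Sample(100, True, 2048),
        Sample(200, True, 2048),
        Sample(300, False, 0),
        Sample(400, True, 4096),
    ]
    for name, value in summarize(samples, test_duration_s=2.0).items():
        print(f"{name}: {value}")
```

CPU and memory utilization are not covered here because they are usually read from the operating system or a monitoring tool rather than from request records.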

Perhaps the most important tip for performance testing is to test early and test often. A single test will not tell developers all they need to know. Successful performance testing is a collection of repeated and smaller tests:

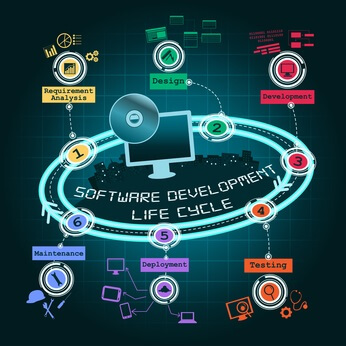

Image credit Varun Kapaganty

In addition to repeated testing, performance testing will be more successful by following a series of performance testing best practices:

Some mistakes can lead to less-than-reliable results when performance testing:

Performance testing fallacies can lead to mistakes or failure to follow performance testing best practices. According to Sofia Palamarchuk, these beliefs can cost significant money and resources when developing software:

As mentioned in the section on performance testing best practices, anticipating and solving performance issues should be an early part of software development. Implementing solutions early will be less costly than major fixes at the end of software development.

Adding processors, servers or memory simply adds to the cost without solving any problems. More efficient software will run better and avoid potential problems that can occur even when hardware is increased or upgraded.

Conducting performance testing in a test environment that is similar to the production environment is a performance testing best practice for a reason. The differences between the elements can significantly affect system performance. It may not be possible to conduct performance testing in the exact production environment, but try to match:

Be careful about extrapolating results. Don’t take the small set of performance testing results and assume that they will be the same when elements change. Also, it works in the opposite direction. Do not infer minimum performance and requirements based upon load testing. All assumptions should be verified through performance testing.

Not every performance problem can be detected in one performance testing scenario. But resources do limit the amount of testing that can happen. In the middle are a series of performance tests that target the riskiest situations and have the greatest impact on performance. Also, problems can arise outside of well-planned and well-designed performance testing. Monitoring the production environment also can detect performance issues.

While it is important to isolate functions for performance testing, the individual component test results do not add up to a system-wide assessment. But it may not be feasible to test all the functionalities of a system. A complete-as-possible performance test must be designed using the resources available. But be aware of what has not been tested.

If a given set of users does experience complications or performance issues, do not consider that a performance test for all users. Use performance testing to make sure the platform and configurations work as expected.

Lack of experience is not the only reason behind performance issues. Mistakes are made — even by developers who have created issue-free software in the past. Many more variables come into play — especially when multiple concurrent users are in the system.

Make sure the test automation is using the software in ways that real users would. This is especially important when performance test parameters are changed.
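One common way to make automated traffic look more like real usage is to mix actions in realistic proportions and add pauses between them. The sketch below is only an illustration: the action names, their weights, and the 1 to 5 second think time range are all hypothetical and would need to come from real usage data.

```python
import random

# Hypothetical action mix: share of traffic per user action.
ACTION_WEIGHTS = {"browse": 0.7, "search": 0.2, "checkout": 0.1}


def simulated_session(rng: random.Random, steps: int) -> list[tuple[str, float]]:
    """Return (action, think_time_seconds) pairs for one virtual user."""
    actions = rng.choices(
        list(ACTION_WEIGHTS), weights=list(ACTION_WEIGHTS.values()), k=steps
    )
    # Think time models the pause a real user takes between actions.
    return [(action, rng.uniform(1.0, 5.0)) for action in actions]


if __name__ == "__main__":
    # A fixed seed keeps the scenario repeatable between test runs.
    for action, think_time in simulated_session(random.Random(42), steps=5):
        print(f"{action:<8} then wait {think_time:.1f} s")
```

Seeding the random generator keeps runs comparable, which matters when the same scenario is repeated with different test parameters.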

Performance and software testing can make or break your software. Before launching your application, make sure that it is as robust as you can make it. No system is ever perfect, but many flaws and mistakes can be prevented.

Testing is an efficient way of preventing your software from failing. Now that you’ve learned the different types of performance testing, how it should be done, and its best practices, you need to choose a testing tool to help you achieve standard performance.

Stackify Retrace helps developers proactively improve the software. Retrace aids developers in identifying bottlenecks of the system and constantly observes the application while in the production environment. This way, you can constantly monitor how the system runs while performing improvements.

Try Stackify’s free code profiler, Prefix, to write better code on your workstation. Prefix works with .NET, Java, PHP, Node.js, Ruby, and Python.
